(96)

Google Images search results for “AI”.

(97)

“Generative Adversarial Networks, or GANs for short, are an approach to generative modeling using deep learning methods, such as convolutional neural networks. Generative modeling is an unsupervised learning task in machine learning that involves automatically discovering and learning the regularities or patterns in input data in such a way that the model can be used to generate or output new examples that plausibly could have been drawn from the original dataset.” Source: This article.

(98)

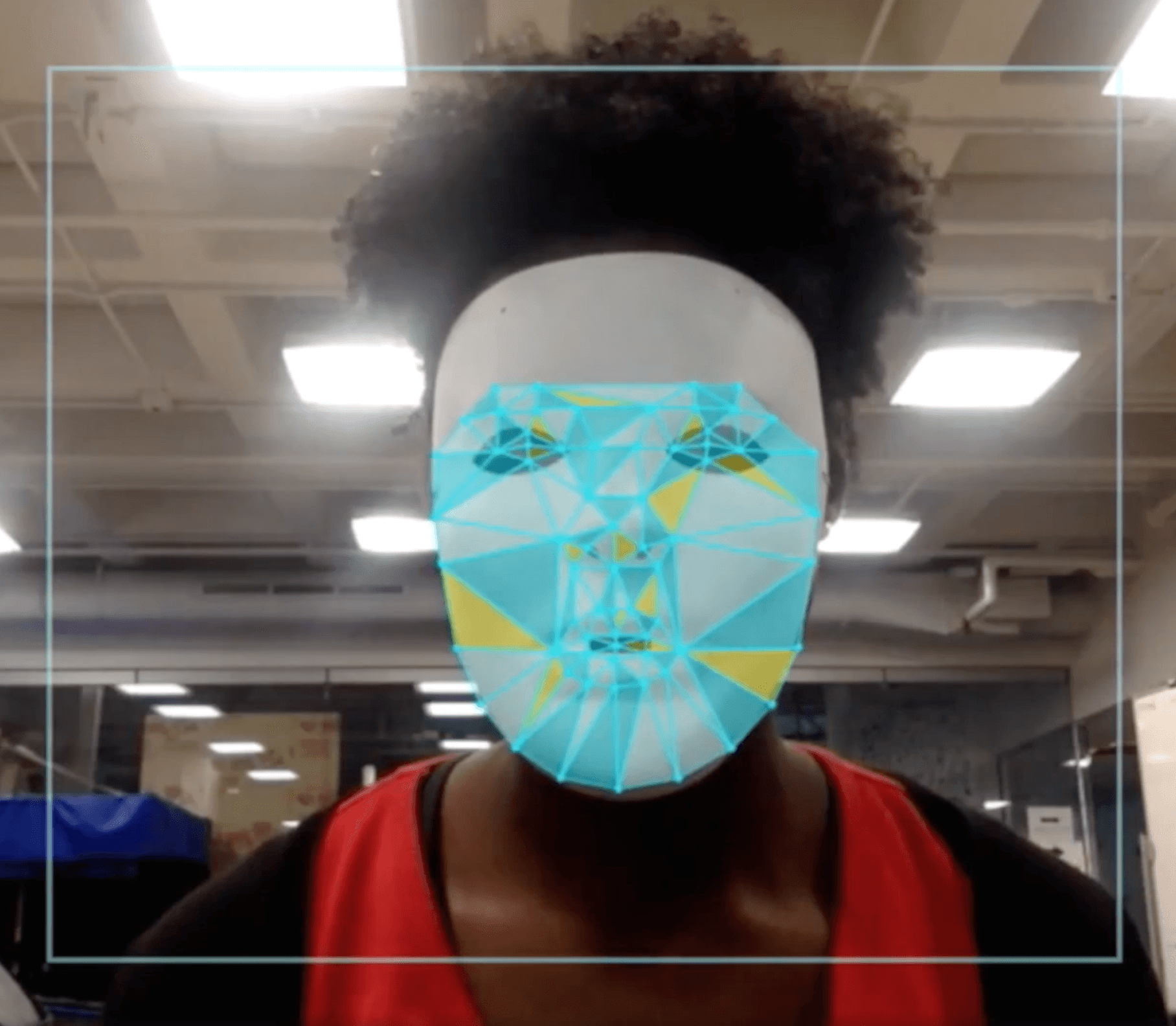

Machine Learning Bias project by Nushin Yazdani

(99)

In her TED talk, MIT graduate student Joy Buolamwini talks about the problem she discovered when working with facial analysis software and her fight against bias in machine learning, a phenomenon she calls the “coded gaze.”

(100)

The Library of Missing Datasets (2016) is a mixed-media installation by Nigerian-American artist Mimi Ọnụọha. Image from her website.

(101)

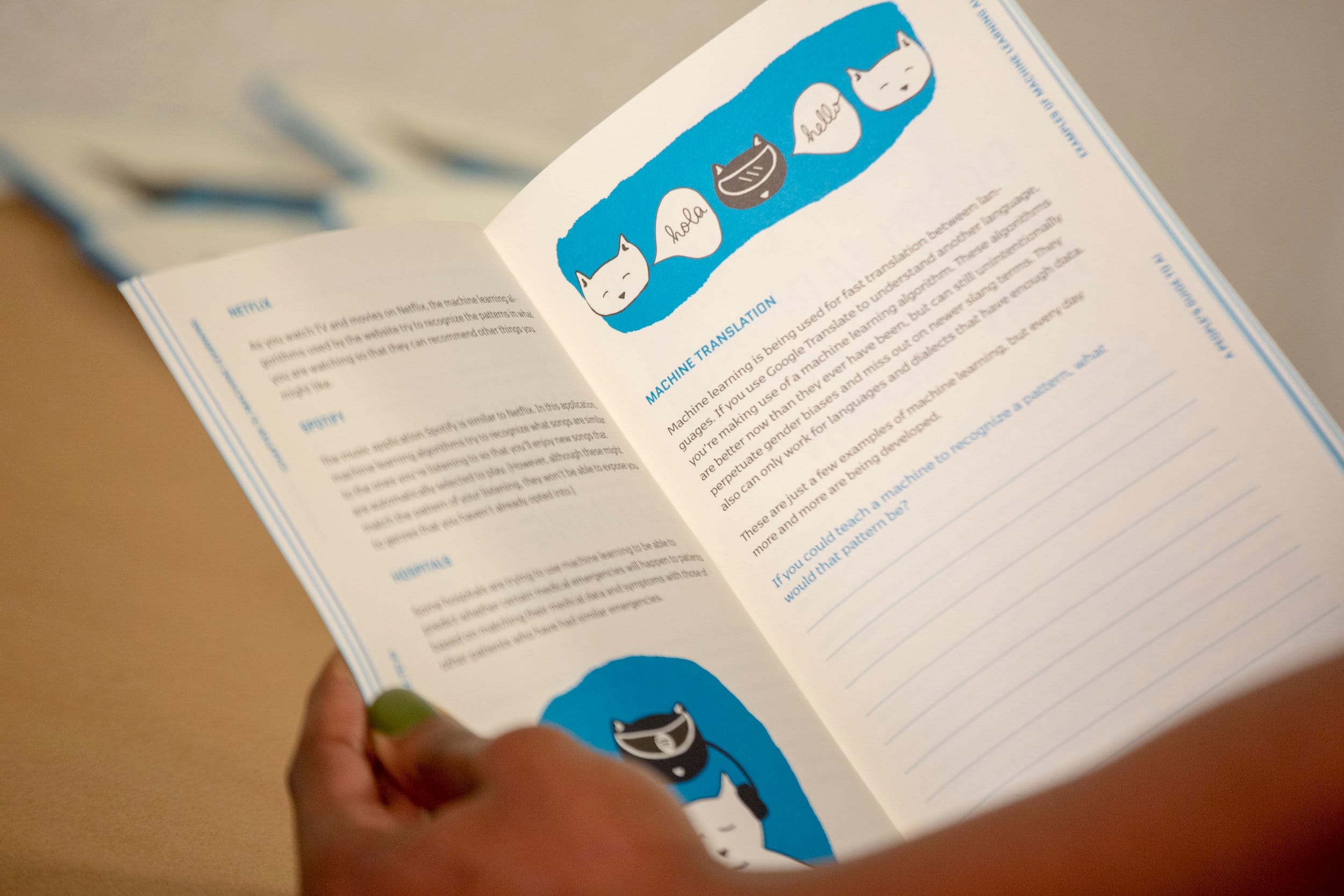

A People’s Guide to AI (2018) by Nigerian-American artist Mimi Ọnụọha. Image from her website.

(102)

Science fiction becoming reality: released in 2002, the dystopian science fiction film Minority Report, set in Washington, D.C., in 2054, depicts a specialised police unit, “Precrime”, which apprehends future criminals based on the visions of three psychics.

(104)

The Feminist AI Ethics Toolkit is the bachelor’s thesis project of Nushin Yazdani, posing the question: “How do we create a framework for the creation process of algorithmic decision-making tools that adequately counteracts unjust systems and considers ethical concerns, consequences, and causalities?” Read more on her website, which is also the source of the image.

(105)

How Normal Am I? Let an AI judge your face and help answer that question.

(106)

“Design justice rethinks design processes, centers people who are normally marginalized by design, and uses collaborative, creative practices to address the deepest challenges our communities face.” The Design Justice Network Principles is a living document providing guidance and resources for people working in the design field.

(107)

“The aim of the field guide is to help designer’s develop power literacy. This includes building up your awareness of, sensitivity to and understanding of the impact of power and systemic oppression in participatory design processes. You will gain a holistic understanding of power, while examining the role you play in reproducing inequity—however unintentional—and what you can do to change this.” — A Social Designer’s Field Guide to Power Literacy

(108)

Stephanie Dinkins’ Not the Only One is a multigenerational memoir of a black American family, told from the perspective of a custom deep learning artificial intelligence (in the form of an interactive sculpture).

(109)

(110)

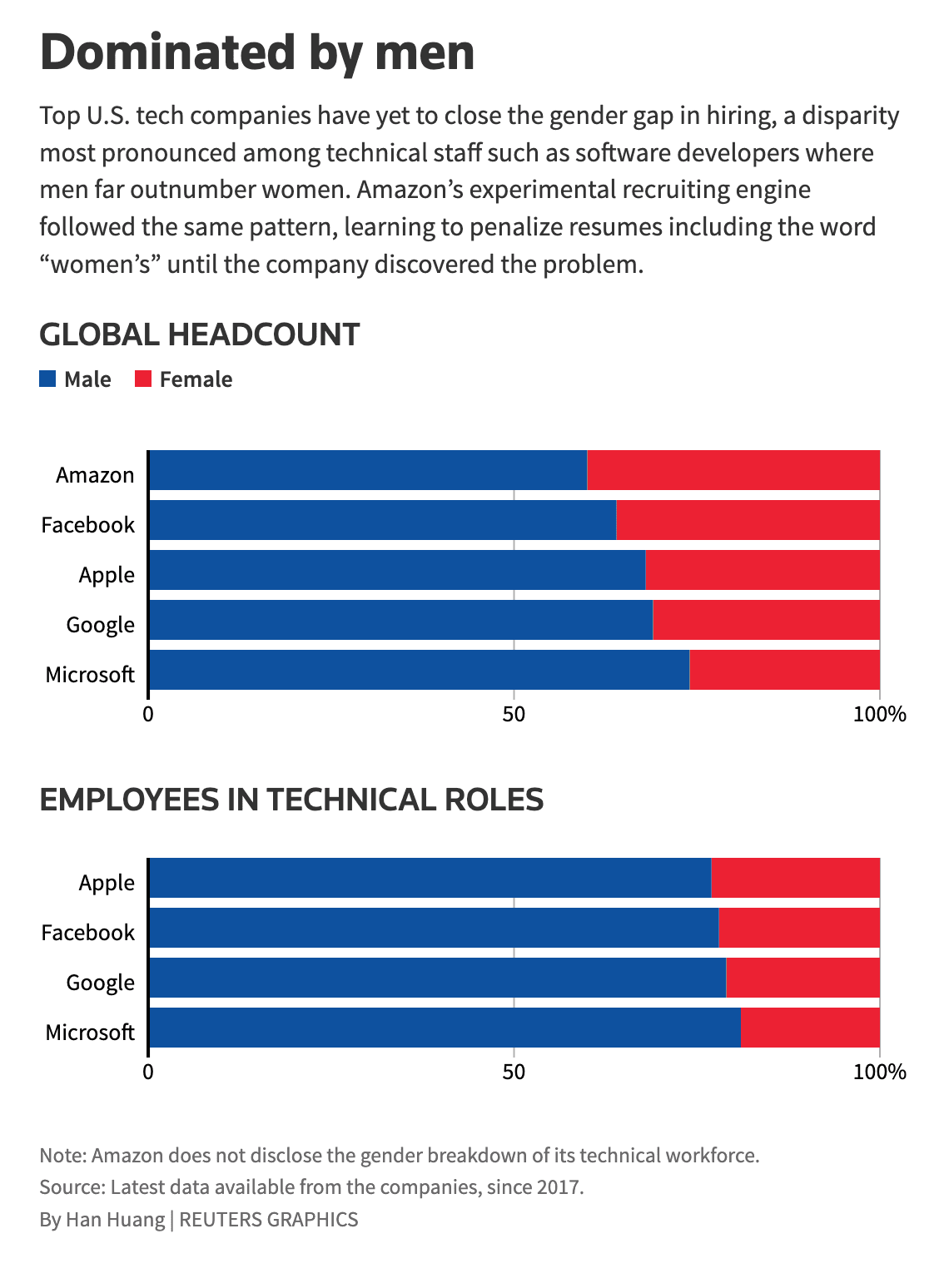

This Reuters article elaborates on the case and includes the graph shown here of the gender balance in U.S. tech companies.

(111)

Translated from this German article: “Max versus Murat: Poorer grades in dictation for primary school children with a Turkish background. A new study shows that primary school children with foreign roots are given lower grades in German by prospective teachers – for the same performance. This is what researchers from the Chair of Educational Psychology at the University of Mannheim found out.”

(112)

The OUTPOST event “OPACITY” with Stefan Kruse and Iyo Bisseck was about this very subject.

Discriminating Machines and Design Justice — Learning from Nushin Yazdani

Chapter 2: Self

September 2022

Words by Bethany Rigby

A lot of what comes to mind when we imagine AI is not yet reality. Since the 1950s, science fiction narratives have spread futuristic visions of 21st-century humanoid robots, fully automated travel and omnipotent military machines, but these are not accurate depictions of current AI.

Machine learning (deep learning, neural networks) is creeping into social structures and institutions with the aim of streamlining clunky administrative processes usually carried out by people, and this often reinforces the social biases present in the data the algorithms were trained on. AI systems are frequently referred to as ‘black boxes’, and the opacity of their inner workings often leads to the acceptance of flawed methods. Because designers are often collaborators and empathetic communicators, they are uniquely positioned to see through this opacity, which places them in good stead to work against such systems and create imaginative alternatives.

Placing herself at the intersections of design research, transformation design, project management and machine learning, Nushin Yazdani presents a toolkit for recognising and combatting algorithmic discrimination and makes the case for why AI justice concerns designers. Her talk at the Tangle alternated between sharing critical resources and holding time for reflection on our own positions as audience members. Through both methods we gained critical insight into machine learning and social bias: knowledge that feels vital in this era of increasing machine experimentation and implementation. Here, we pass on some of this knowledge.

A series of case studies on AI justice, collated by Nushin Yazdani, spanning the criminal justice system, the workplace, and machine vision:

The Criminal Justice System

Using US mugshots released into the public domain, Yazdani trains a GAN to produce AI-generated portraits of future criminals, asking the question: what can we tell about future inmates from the faces of current convicts? Driving this work is the increasing deployment of machine learning algorithms in criminal sentencing, where machine predictions are used to help determine sentence length. “Here we can ask – What does it mean to create a dataset out of photos stolen from www.mugshots.com, a privately owned website that makes you pay for erasing your own mugshot, name and even addresses? Who can afford to pay, who will stay in this grotesque database forever, and, in this case, will give their face to creating a new generation of prisoners?” (Nushin Yazdani)

As examined in this ProPublica article, an algorithm used in US courts generates a score estimating how likely a defendant is to commit future crimes, and this score can influence decisions on sentence length and severity and on bail amounts. These risk assessments were found to wrongly flag black defendants as likely reoffenders at almost twice the rate of white defendants. The scores also proved unreliable in forecasting violent crime: only 20% of the people predicted to commit violent crimes actually went on to do so.

The Workplace

Yazdani asks: what are the parameters for discrimination within a job application? Skills? Previous workplaces? Grades? Schools attended? Recent promotions? HR departments, increasingly under pressure, are turning to automated CV screening, and, as numerous case studies have shown, many of these systems have reinforced biases against minorities in their selection of ‘ideal’ candidates.

In Germany, prospective teachers graded copies of the same dictation test with one difference: the name of the pupil. Depending on whether the pupil was called Max (a typically ‘strong’ male Germanic name) or Murat (a Turkish name), the grades differed markedly, with lower scores given to Murat even though the answers on the test were identical.

In 2018, an AI recruiting tool developed by Amazon was deemed sexist after it was found to rate women lower than men for job opportunities. The machine had been trained on 10 years of recruiting data from successful and failed applications, a period in which men were historically favoured over women. It went so far as to penalise CVs that included the word ‘women’s’ (as in ‘women’s chess club captain’) and downgraded applicants who had attended some women-only colleges. In effect, Amazon’s system taught itself that male candidates were preferable, ultimately reflecting the male dominance that exists within the tech industry. Here, Yazdani points out that the machines themselves are not inherently biased but that “data is human made”, so machines compound the issues within that data.

In Machine Vision

Historically, photographic film captured white faces far more accurately than black faces. It was only when mahogany furniture retailers demanded better detail in their product images that film rendering all skin colours with greater quality began to be developed. This trend, however, was not restricted to analogue photography, and history repeated itself with the development of digital cameras and facial recognition devices.

Joy Buolamwini is a poet of code who uses art and research to illuminate the implications of artificial intelligence, and she has conducted vital work interrogating facial analysis software from companies such as IBM, Face++ and Microsoft. Her research project ‘Gender Shades’ found that all of the companies’ systems classified the gender of male faces more accurately than that of female faces, with an 8.1% to 20.6% difference in error rates. When the results are analysed by intersectional subgroups (darker males, darker females, lighter males, lighter females), all companies perform worst on darker females.

Yazdani goes on to note, however, that implementing solutions to these issues without care can be a form of violent data colonialism. For example, Chinese tech companies have partnered with the Zimbabwean government on a surveillance programme covering the population in return for images to diversify their machine learning datasets. In a patriarchal and capitalist society, such a “solution” both emerges from and reinforces neocolonial, exploitative infrastructures. “Data is data of the past, it is not looking to the future” (Yazdani, POST Design Tangle).

Inspirational works that strive towards more just futures:

Feminist AI Ethics Toolkit by Nushin Yazdani

Design Justice Network and Principles

A Social Designer’s Field Guide to Power Literacy

Not The Only One by Stephanie Dinkins

The thumbnail image is from the project Dreaming Beyond AI by Nushin Yazdani and Buse Çetin. The platform is coded by Iyo Bisseck.
